Subscribe

Many of our customers have asked whether Coverity can detect Heartbleed. The answer is not yet – but we’ve put together a new analysis heuristic that works remarkably well and does detect it (UPDATE: the Coverity platform now detects the Heartbleed defect). We wanted to tell our customers and readers about this heuristic and what it shows about the way we approach static analysis.

John Regehr blogged (1) last week about Coverity Scan, our free scanning service for the open source community. While there were interesting defects found in OpenSSL, Heartbleed was not among them. After adding the new heuristic designed to catch this and other similar defects, we shared our updated results with John and he was gracious enough to write a follow-up blog (2), which we think is fantastic.

Initially, we wanted to independently verify the results so we ran the latest production version of our analysis (7.0.3) against openssl-1.0.1. Coverity Scan uses a particular set of analysis options, and we wondered if different settings might cause the defect to appear. After a few experiments, we determined that analysis settings didn’t make a difference for this particular defect.So we dug into the code further to determine why. At its heart, Heartbleed is an out of bounds memory read based on tainted data being used as an argument to memcpy. The main difficulty in detecting it is in realizing the source data is tainted. Most of the descriptions of Heartbleed begin with this line:

unsigned char *p = &s->s3->rrec.data[0]

But for a static analysis, it is not obvious that the field data is tainted, and finding the evidence for this in the program can be difficult. One illustration of this is in the definition of the structure that contains data:

typedef struct ssl3_record_st

{

/*r */ int type; /* type of record */

/*rw*/ unsigned int length; /* How many bytes available */

/*r */ unsigned int off; /* read/write offset into 'buf' */

/*rw*/ unsigned char *data; /* pointer to the record data */

/*rw*/ unsigned char *input; /* where the decode bytes are */

/*r */ unsigned char *comp; /* only used with decompression - malloc()ed */

/*r */ unsigned long epoch; /* epoch number, needed by DTLS1 */

/*r */ unsigned char seq_num[8]; /* sequence number, needed by DTLS1 */

} SSL3_RECORD;

The comments aid human comprehension, but static analysis doesn’t benefit much from them. Instead, we attempt to trace the flow of tainted data from where it originates in a library call into the program’s data structures. This can be difficult to do without introducing large numbers of false positives, or scaling performance exponentially poorly. In this case, balancing these and other factors in the analysis design caused us to miss this defect.

Program analysis is hard and approximations and trade-offs are absolutely mandatory. We’ve found that the best results come from a combination of advanced algorithms and knowledge of idioms that occur in real-world code. What’s particularly insightful is to analyze critical defects for clues that humans might pick up on but are hard to derive from first principles. These patterns form pieces of evidence that can then be generalized and tested empirically to make the analysis “smarter.” Our experience is that this is the only way to build analyses that scale to large programs with low false positive rates, yet find critical defects. Many program analysis problems are undecidable in general, and in practice NP-complete problems and severe time/space/accuracy trade-offs crop up everywhere. Giving the analysis intuition and developer “street smarts” is key to providing high quality analysis results.

The Heartbleed bug is a perfect example of this. I sat down with one of our analysis engineers to examine whether there was any hope for finding this defect in a smarter way. It seemed bleak. The flow of tainted data into the data field was convoluted, and even manually we had a hard time understanding exactly how the code worked.

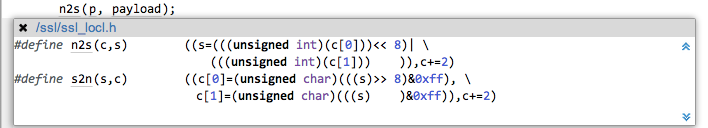

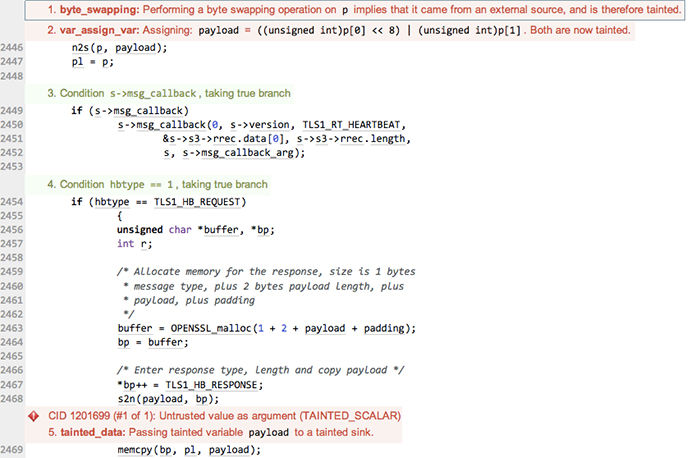

Then we noticed that the tainted data was being converted via n2s, a macro that performs byte swapping:

Byte swaps are relatively rare operations. They can occur in cryptographic and image processing code, but perhaps the most widespread use is to convert between network and host endianness (e.g. ntohs). We had a hunch that byte swaps constitute fairly strong evidence that the data is from the outside network and therefore tainted (this also applies to reading a potentially untrusted binary file format such as an image). In addition to byte swapping, we also look for the bytes being subsequently recombined into a larger integer type. We also require that the tainted value flows into a tainted sink, such as an array index or, as in this case, a length argument to a memory operation. These additional conditions help avoid false positives when byte swapping is being used in a situation which isn’t tainted. For example, outgoing data that is byte swapped is unlikely to flow into a tainted sink.

With that, Heartbleed revealed itself.

This heuristic bypasses the complex control-flow and data-flow path that reaches this point in the program, and instead infers and tracks tainted data near near the point where it is used. It generalizes to all programs that use byte swaps so it is not overly specific to OpenSSL. Nor is this restricted to intraprocedural cases. We’ve added this heuristic to the derivers that compute function summaries, so any tainted data inferred is automatically propagated throughout the rest of the program. By collecting this and other similar idioms together, we can pick up large amounts of tainted data without any codebase-specific modeling.

Beyond Heartbleed, we found a handful of additional issues in OpenSSL with this heuristic which we are investigating. We believe (hope?) they are false positives. If they are, we will further tune the analysis to understand why. Even without such tuning, we have not seen an “explosion” of false positives. The entire set of results, including the new heuristic, will be made available to a selected group of users on the OpenSSL project on Coverity Scan shortly.

We plan on performing additional experiments on a larger corpus of code including 60M+ lines of open source and some additional proprietary code to validate our assumptions and determine if there are other common idioms for use of byte swapping that do not imply taintedness. These steps are part of our standard process for vetting all analysis changes before releasing them to our customers.

Continue Reading

AI in DevSecOps: A critical crossroads for security and risk management

Oct 08, 2025 | 6 min read

Key findings from “The State of Embedded Software Quality and Safety 2025” report

Aug 26, 2025 | 3 min read

What you need to know about the NIST Secure Software Development Framework

Aug 12, 2025 | 5 min read